
Quick Summary: Many machine learning developers face a dilemma when selecting the best framework for their ML development projects. Multiple advanced machine learning frameworks are available, including PyTorch, Apache Spark, and TensorFlow, as well as Caffe, Amazon SageMaker, and Theano. With so many choices, it’s easy to get overwhelmed, so X-Byte’s curated list of the best ML frameworks for developers in 2025 is here to make that choice easier.

Machine learning frameworks are software ecosystems of prebuilt components, pre-implemented algorithms, low-level mathematical operations, neural network architectures, and other tools that together help machine learning developers build, train, and deploy robust ML models. They speed up ML development and simplify the process.

However, developers still have to select the framework that best suits their needs for a specific ML model. With so many ML frameworks on the market, developers are spoiled for choice. But it all boils down to the kind of task you want to accomplish and the domain you work in: NLP, vision, edge computing, structured data, and more.

From PyTorch to scikit-learn, here’s an extensive, no-fluff guide by our ML development services experts to help you pick the best ML framework today. The tools have been evaluated based on user-friendliness, performance, scalability, and community support.

Let's dive in!

What are Machine Learning Frameworks?

Machine learning frameworks provide prebuilt, highly optimized components for developing and deploying ML models. Just as mobile development frameworks ease the work of mobile app developers, ML frameworks ease the work of ML developers. These frameworks are key to end-to-end AI and ML development services.

They support distributed computing, provide high-level abstractions, and are optimized for specific hardware. They also come with extensive libraries, developer tools, and community support to accelerate machine learning development.

Advanced ML frameworks also offer model serving, which makes it easier to deploy models in production, along with built-in visualization tools for model architecture, training progress, and results analysis.

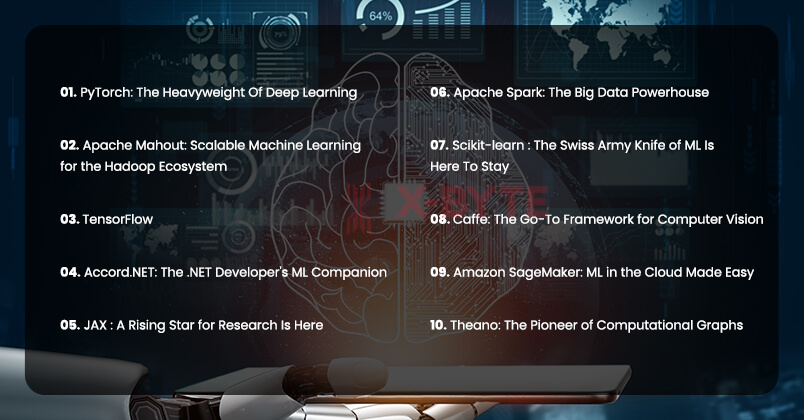

10 Best Frameworks for Machine Learning in 2025

PyTorch: The Heavyweight Of Deep Learning

Backed by a robust ecosystem, PyTorch remains a machine learning titan and a favorite among researchers thanks to its simplicity. Whether you are building neural networks on the fly or debugging them, PyTorch’s intuitive syntax lets you see results immediately. You can write code much as you would with NumPy, but with GPU acceleration under the hood.

PyTorch executes operations eagerly (an imperative style), which makes it great for experimentation. There is no separate graph-compilation step, so you can print() intermediate results or step through your code with the Python debugger. It also offers native support for distributed training through DistributedDataParallel (DDP).

PyTorch, originally developed by Facebook and now governed by the PyTorch Foundation, has a vibrant and fast-growing community. It is extremely popular for research and academic work and sees active development, with thousands of contributed libraries for text, graphics, vision, and other domains.

Why choose PyTorch:

- A good framework for deep learning beginners, with a gentle learning curve

- Has a straightforward approach to training models

- Dynamic graphs are a delight to use

- PyTorch 2.0 introduced a compile mode (torch.compile) that speeds up models through graph-level optimization and hardware-specific acceleration, and the framework also supports quantization

- Seamless deployment support for edge devices and mobile

Apache Mahout: Scalable Machine Learning for the Hadoop Ecosystem

Apache Mahout is a tool for big data analysis and machine learning. It’s especially useful for companies that already use Hadoop, a system for storing and processing large amounts of data across many computers. While it may not be as popular as some newer frameworks, Mahout still holds its ground in specific big data scenarios. It can also run on top of engines such as Apache Spark and Apache Flink to process data faster.

Why use Apache Mahout:

- Designed for scalability with distributed algorithms

- Integrates well with the Hadoop ecosystem

- Supports a wide range of ML algorithms, including clustering, classification, and collaborative filtering

- Offers a Scala-based DSL for easier algorithm implementation

- Useful for organizations already using Hadoop for data processing
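
Collaborative filtering is easiest to picture at small scale. The following is a minimal plain-Python sketch, not Mahout’s API: it counts how often pairs of items appear in the same user’s history (the item co-occurrence idea behind item-based recommenders) and suggests unseen items that co-occur most with what a user already has. The function names and purchase data are hypothetical; Mahout’s contribution is running this kind of computation across a Hadoop or Spark cluster.

```python
from collections import Counter, defaultdict
from itertools import combinations


def cooccurrence(histories):
    """Count how often each pair of items appears in the same user's history."""
    counts = defaultdict(Counter)
    for items in histories.values():
        for a, b in combinations(sorted(set(items)), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def recommend(user, histories, counts, k=2):
    """Score unseen items by summing their co-occurrence counts with the user's items."""
    seen = set(histories[user])
    scores = Counter()
    for item in seen:
        for other, c in counts[item].items():
            if other not in seen:
                scores[other] += c
    # Highest score first; ties broken alphabetically so results are deterministic.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]


# Hypothetical purchase histories.
histories = {
    "alice": ["laptop", "mouse", "keyboard"],
    "bob": ["laptop", "mouse"],
    "carol": ["mouse", "keyboard", "monitor"],
    "dave": ["laptop"],
}
counts = cooccurrence(histories)
print(recommend("dave", histories, counts))  # → ['mouse', 'keyboard']
```

Because the co-occurrence counts are computed once and reused for every user, the expensive step splits naturally across machines, which is the kind of workload distributed engines handle well.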

TensorFlow

Next comes Google's TensorFlow, an industry veteran dating back to 2015 that has powered massive production systems as well as academic research. By adopting Keras as its high-level API, TensorFlow has made model building much easier. Eager execution is on by default, so in practice it behaves much like PyTorch, while tf.function still lets you compose high-performance static graphs.

Nevertheless, the framework is a bit more complex than PyTorch, partly because there is often more than one way to solve a problem. Expect to spend some time on Stack Overflow during your first days with TensorFlow. Where it really shines is large-scale production, scaling easily from a single GPU to a multi-node TPU pod.

TensorFlow also has a rewarding community and an equally rich resource pool of courses, books, tutorials, and GitHub repos. Its community is more mature than PyTorch's, so for most debugging or model implementation needs, there's a good chance the solution is already on Stack Exchange or GitHub.

Why use TensorFlow:

- A great framework for anyone who wants to go a step beyond PyTorch

- Has extensive documentation and tutorials to help newbies

- Keras integration improves usability

- Built-in distributed training through the tf.distribute API, scaling from multiple GPUs to TPU pods

Many machine learning teams take a hybrid approach, using PyTorch for research and prototyping and then converting models to TensorFlow for deployment. Interchange formats like ONNX have made this much easier. Both PyTorch and TensorFlow are battle-tested frameworks, so you can't go far wrong with either of them.


Accord.NET: The .NET Developer's ML Companion

For developers deeply entrenched in the .NET ecosystem, Accord.NET is a breath of fresh air. It brings advanced math and machine learning to the C# and F# programming languages. The framework isn't limited to one or two tasks; it covers many areas of scientific computing, such as:

- Working with statistics

- Understanding images (computer vision)

- Processing sound

What sets Accord.NET apart is its seamless integration with existing .NET applications. Whether you're building a desktop app or a web service, you can easily incorporate machine learning capabilities without leaving your familiar development environment.

Why use Accord.NET:

- Native .NET support for machine learning and scientific computing

- Comprehensive library covering various ML algorithms and statistical methods

- Excellent documentation and code samples

- Ideal for Windows-centric development environments

- Mature, stable codebase, though the project has been archived and is no longer actively maintained

JAX: A Rising Star for Research Is Here

As a new player on the block, JAX has been gaining plenty of traction among researchers. If you haven't met JAX yet, here's a quick picture: imagine NumPy with an upgrade that runs on GPUs and TPUs and takes automatic differentiation a notch higher. That's JAX!

Developed at Google Brain, JAX brings high-performance numerical computing to machine learning. Sure, it's not as user-friendly as PyTorch or TensorFlow, but ML experts have fallen for it for other reasons. For starters, JAX uses just-in-time (JIT) compilation through the XLA compiler to optimize code: you write the math in Python, and JAX compiles it into fast machine code for the target hardware.

Despite all its strengths, JAX can feel like a low-level ML framework without add-on libraries such as Haiku or Flax. Its design is distinctive, though: random number state is managed explicitly, and there is no implicit global graph. For seasoned developers, JAX is a strong choice when speed and a functional style matter most.

Why use JAX:

- JAX is built with Google’s TPUs in mind, offering seamless integration with GPUs/TPUs for speed.

- JAX can automatically vectorize your code (vmap) and parallelize it across devices (pmap).

- It can compute gradients of numerical Python functions written with JAX operations (grad), which makes it a powerful tool for advanced research.

- JAX treats your model and training loop as pure functions, which makes code easier to reason about and encourages a functional style for math-heavy tasks.

Apache Spark: The Big Data Powerhouse

Apache Spark is one of the most powerful tools for machine learning on big data. Although it is a general-purpose data processing engine rather than an ML-only tool, it includes a library called MLlib that is built for machine learning on large datasets spread across many computers. It integrates seamlessly with Hadoop ecosystems and supports multiple programming languages, including Scala, Java, Python, and R.

Spark is particularly useful for machine learning algorithms that need to go over the data many times, because it can cache datasets in memory between iterations instead of rereading them from disk. Its flexibility in handling different types of data processing makes it a popular choice for many machine learning projects, from recommendation systems to real-time fraud detection.
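
Why repeated passes matter is easiest to see in a sketch. The code below is plain Python, not PySpark: it runs data-parallel gradient descent for a one-variable linear model, where each iteration maps over every partition to compute partial gradient sums and then reduces them, the same map-then-reduce pattern Spark distributes across a cluster. The partitions, learning rate, and iteration count are hypothetical choices; in Spark, keeping the partitions cached in memory is what makes those 2,000 passes cheap.

```python
def partial_gradient(partition, w, b):
    """Map step: squared-error gradient sums of one partition for y = w * x + b."""
    grad_w = grad_b = 0.0
    for x, y in partition:
        err = (w * x + b) - y
        grad_w += err * x
        grad_b += err
    return grad_w, grad_b, len(partition)


def train(partitions, lr=0.1, iterations=2000):
    w = b = 0.0
    for _ in range(iterations):  # each iteration is one full pass over every partition
        results = [partial_gradient(p, w, b) for p in partitions]  # "map"
        grad_w = sum(r[0] for r in results)  # "reduce": combine the partial sums
        grad_b = sum(r[1] for r in results)
        n = sum(r[2] for r in results)
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical data generated from y = 2x + 1, split into three partitions.
data = [(i / 10, 2 * (i / 10) + 1) for i in range(30)]
partitions = [data[0:10], data[10:20], data[20:30]]
w, b = train(partitions)
print(f"w = {w:.3f}, b = {b:.3f}")  # → w = 2.000, b = 1.000
```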

Why use Apache Spark:

- Excellent for big data processing and distributed machine learning

- Supports both batch and stream processing

- Offers a wide range of machine learning algorithms through MLlib

- Integrates well with other big data tools and cloud platforms

- Active community support and continuous development

Scikit-learn: The Swiss Army Knife of ML Is Here To Stay

Anyone who’s had some training in machine learning with Python has almost certainly come across scikit-learn. Although technically a library rather than a framework, scikit-learn (sklearn) is tried and tested, with a large collection of algorithms for tasks such as regression, classification, clustering, and feature engineering. It is designed mainly for small- to medium-scale data and in-memory computation. Many of its performance-critical algorithms are implemented in C or Cython.

Why use Scikit-learn:

- It offers a consistent API (.fit, .predict, .transform methods on models) and unmatched documentation.

- It’s incredibly lightweight and does not require GPUs.

- It is one of the most popular machine learning libraries, maintained by open-source contributors and backed by countless Stack Overflow answers and cheat sheets.
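
That consistency is easy to see in miniature. The sketch below uses two hypothetical classes written in plain Python (so it runs without scikit-learn installed) that follow the same conventions: fit() learns from data and returns self, transform() or predict() applies what was learned, and learned attributes end in an underscore. With the real library you would reach for classes such as sklearn.preprocessing.StandardScaler and sklearn.neighbors.NearestCentroid instead.

```python
import math


class SimpleScaler:
    """Hypothetical transformer: standardizes each feature to mean 0, std 1."""

    def fit(self, X):
        n = len(X)
        self.mean_ = [sum(col) / n for col in zip(*X)]
        # "or 1.0" avoids division by zero for a constant feature.
        self.scale_ = [
            math.sqrt(sum((v - m) ** 2 for v in col) / n) or 1.0
            for col, m in zip(zip(*X), self.mean_)
        ]
        return self  # returning self allows scaler.fit(X).transform(X)

    def transform(self, X):
        return [[(v - m) / s for v, m, s in zip(row, self.mean_, self.scale_)] for row in X]


class CentroidClassifier:
    """Hypothetical estimator: predicts the class whose mean point is nearest."""

    def fit(self, X, y):
        groups = {}
        for row, label in zip(X, y):
            groups.setdefault(label, []).append(row)
        self.centroids_ = {
            label: [sum(col) / len(rows) for col in zip(*rows)]
            for label, rows in groups.items()
        }
        return self

    def predict(self, X):
        def nearest(row):
            return min(self.centroids_, key=lambda lbl: math.dist(row, self.centroids_[lbl]))

        return [nearest(row) for row in X]


X_train = [[1.0, 200.0], [2.0, 180.0], [8.0, 20.0], [9.0, 40.0]]
y_train = ["a", "a", "b", "b"]
scaler = SimpleScaler().fit(X_train)
model = CentroidClassifier().fit(scaler.transform(X_train), y_train)
print(model.predict(scaler.transform([[1.5, 190.0], [8.5, 30.0]])))  # → ['a', 'b']
```

Because every estimator shares this shape, swapping one model for another, or chaining steps in a Pipeline, usually means changing a single line.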

Caffe: The Go-To Framework for Computer Vision

Caffe is a deep learning framework best known for working with images. It was originally created by researchers at UC Berkeley (the Berkeley Vision and Learning Center) and is particularly popular for image classification and segmentation tasks. It's also incredibly fast: on a single NVIDIA K40 GPU, it can process over 60 million images in one day. While Caffe might not be as flexible as PyTorch or TensorFlow for general-purpose deep learning, it shines when it comes to deploying models in production environments, especially for vision-related tasks.
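
To put the 60-million-images-per-day figure in per-image terms, a quick back-of-the-envelope calculation (using 86,400 seconds per day and assuming the work is spread evenly across the day) gives:

$$
\frac{60 \times 10^{6}\ \text{images/day}}{86\,400\ \text{s/day}} \approx 694\ \text{images/s}
\qquad\Rightarrow\qquad
\frac{1\ \text{s}}{694\ \text{images}} \approx 1.44\ \text{ms/image}
$$

That average of roughly 1.4 ms per image is simple arithmetic on the quoted figure, not a separate benchmark.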

Why use Caffe:

- Optimized for computer vision tasks

- Excellent performance and speed for image processing

- Rich ecosystem of pre-trained models

- Easy model deployment in production environments

- Strong support from the computer vision community

Amazon SageMaker: ML in the Cloud Made Easy

More and more companies are moving their work to the cloud, and in this shift, Amazon SageMaker has become a very useful tool for machine learning (ML). It's not a single program but a whole set of tools that make ML work easier from start to finish. SageMaker provides Jupyter notebooks for data exploration, built-in algorithms, and support for bringing your own algorithms. What sets it apart is its seamless integration with AWS services, which makes it easy to handle large datasets, distribute training across multiple instances, and deploy models as scalable endpoints.

Why use Amazon SageMaker:

- End-to-end platform for the entire ML lifecycle

- Seamless integration with the AWS ecosystem

- Built-in algorithms and support for custom frameworks

- Automatic model tuning and optimization

- Scalable infrastructure for training and deployment

Theano: The Pioneer of Computational Graphs

While Theano's active development has ceased, its impact on machine learning cannot be overstated. It pioneered the use of computational graphs for machine learning, a concept now central to frameworks like TensorFlow and PyTorch. Theano excels at numerical computation, particularly for problems involving large, multi-dimensional arrays. Its ability to use GPUs for faster processing made it a favorite among researchers, especially in the early days of deep learning.

Why use Theano:

- Efficient numerical computation, especially for array operations

- Strong support for GPU acceleration

- Automatic differentiation capabilities

- Extensive documentation and academic usage examples

- Historical significance in the development of modern ML frameworks
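
Automatic differentiation over a computational graph, the idea Theano helped popularize, fits in a few dozen lines. The toy below is plain Python, not Theano's API, and the Var class is hypothetical: each node records its parents together with the local derivative of the operation that produced it, and backward() applies the chain rule in reverse topological order (reverse-mode differentiation). One design difference to note: Theano compiled a symbolic graph before running it, whereas this sketch records the graph as operations execute, which is closer to PyTorch's define-by-run style.

```python
import math


class Var:
    """A node in a computational graph holding a value and its gradient."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each parent is stored with the local derivative d(self)/d(parent).
        self.parents = parents

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def tanh(self):
        t = math.tanh(self.value)
        return Var(t, ((self, 1.0 - t * t),))

    def backward(self):
        """Reverse-mode autodiff: propagate gradients from outputs to inputs."""
        order, visited = [], set()

        def visit(node):
            # Post-order DFS: a node is appended only after all of its parents.
            if id(node) not in visited:
                visited.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad  # chain rule


# f(x, w, b) = tanh(w * x + b)
x, w, b = Var(0.5), Var(2.0), Var(-1.0)
f = (w * x + b).tanh()
f.backward()
print(f.value, w.grad, b.grad, x.grad)  # → 0.0 0.5 1.0 2.0
```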

Together, the ML frameworks above address most of the challenges developers encounter and support high-quality, seamless ML development. Beyond this top 10, we would like to add two more tools that developers should keep on their radar.

XGBoost and LightGBM: A Fine Ensemble

Have you ever followed Kaggle competitions or built ML models for structured data? Chances are you’ve encountered gradient boosting machines. Libraries like XGBoost and LightGBM have won many competitions because they deliver remarkable results on tabular datasets, often outperforming deep neural networks on that kind of data. By all means, they have earned their place in this list of the best ML frameworks for developers in 2025.

XGBoost implements gradient-boosted decision trees. It is remarkably fast, with optimizations such as histogram-based split finding and parallel tree construction, so it handles large datasets with ease, and it has built-in regularization to help avoid overfitting.
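
The core loop behind both libraries can be sketched in plain Python. This is a minimal illustration under stated assumptions, not XGBoost's or LightGBM's API: one numeric feature, squared-error loss (whose negative gradient is simply the residual), and depth-1 trees (stumps). In the spirit of histogram-based splitting, feature values are mapped to bins once, and each round sums the residuals per bin and scans only the bin boundaries for the best split. The bin count, learning rate, and number of rounds are arbitrary choices, and the function names are hypothetical.

```python
def fit_stump(bins, residuals, n_bins):
    """Histogram split search: sum residuals per bin, then scan the bin boundaries."""
    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for b, r in zip(bins, residuals):
        sums[b] += r
        counts[b] += 1
    total_s, total_n = sum(sums), sum(counts)
    best = None  # assumes the data spans at least two non-empty bins
    left_s, left_n = 0.0, 0
    for split in range(n_bins - 1):  # left leaf = bins 0..split, right leaf = the rest
        left_s += sums[split]
        left_n += counts[split]
        right_s, right_n = total_s - left_s, total_n - left_n
        if left_n == 0 or right_n == 0:
            continue
        # Minimizing squared error is equivalent to maximizing this score.
        score = left_s ** 2 / left_n + right_s ** 2 / right_n
        if best is None or score > best[0]:
            best = (score, split, left_s / left_n, right_s / right_n)
    _, best_split, left_value, right_value = best
    return lambda b: left_value if b <= best_split else right_value


def gradient_boost(xs, ys, rounds=50, lr=0.3, n_bins=16):
    lo, hi = min(xs), max(xs)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature

    def to_bin(x):
        # Clamp so the maximum value (and unseen values) stay inside the bin range.
        return max(0, min(int((x - lo) / width), n_bins - 1))

    bins = [to_bin(x) for x in xs]  # binning happens once, up front
    base = sum(ys) / len(ys)  # initial prediction: the mean target
    stumps = []

    def predict(x):
        b = to_bin(x)
        return base + sum(lr * stump(b) for stump in stumps)

    for _ in range(rounds):
        # For squared-error loss, the negative gradient is the residual y - F(x).
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(bins, residuals, n_bins))
    return predict


# Hypothetical step-shaped data that a single straight line would fit poorly.
xs = [i / 10 for i in range(40)]
ys = [1.0 if x < 1.0 else 3.0 if x < 2.5 else 2.0 for x in xs]
model = gradient_boost(xs, ys)
mse = sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE: {mse:.4f}")
```

Real implementations add deeper trees, second-order gradient statistics, regularization, and many features, but the fit-to-the-residuals loop is the same idea.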

LightGBM, Microsoft’s open-source gradient boosting framework, also uses tree-based learning but is optimized for speed and lower memory usage. Compared with XGBoost, LightGBM is often faster and lighter, which helps when you have many features to work with. It also offers a Python API and scikit-learn integration.

Ushering a New Era Of Transformer Ecosystems With NLP and Language AI

Thanks to Transformer models, natural language processing has exploded in recent years. Pre-trained language models such as GPT and BERT keep raising the bar. NLP tooling is evolving just as quickly in 2025, which is why you are likely to tap into the Hugging Face ecosystem and its associated frameworks.

The Hugging Face Transformers library offers a unified interface to a vast number of pre-trained models, so developers can download models like T5 and GPT-2 with a single line of code and use them for tasks like text summarization, translation, classification, and more.

The Hugging Face Model Hub hosts well over 500,000 models, a huge community-contributed collection covering almost every task you can imagine. Beyond Hugging Face, spaCy is another excellent option for production-level NLP pipelines, especially for tasks such as named entity recognition and part-of-speech tagging.

Functional ML Frameworks for On-Device ML

Machine learning isn't limited to cloud servers. The current trend is pushing models to edge devices, from smartphones to AR/VR headsets. Running AI on-device brings privacy and low-latency benefits, but limited memory and compute remain real constraints. That's where edge deployment frameworks like TensorFlow Lite come in. Developed by Google, TensorFlow Lite is widely used for Android apps and IoT projects.

For the Apple ecosystem, there’s Core ML, which provides a model format and API that can use the Neural Engine or GPU for acceleration. Core ML supports a range of models, including CNNs, RNNs, and transformers, and models can be compressed using 8-bit quantization or 16-bit floats.
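
Both compression options can be illustrated without any Apple tooling. The minimal sketch below, in plain Python rather than Core ML's or coremltools' API, applies generic affine int8 quantization (a scale and zero point map the weights' [min, max] range onto [-128, 127]) and a float16 round trip via the standard struct module's 'e' format. The weight values and function names are hypothetical, and production converters often quantize per channel and handle edge cases this sketch skips.

```python
import struct


def quantize_int8(values):
    """Affine quantization: map [min, max] of the values onto int8 [-128, 127]."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / 255 or 1.0  # guard against a constant tensor
    zero_point = round(-128 - lo / scale)
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(qi - zero_point) * scale for qi in q]


def to_float16(value):
    """Round-trip a float through IEEE 754 half precision (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


weights = [-0.82, -0.31, 0.0, 0.12, 0.57, 1.03]  # hypothetical layer weights
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
for w, qi, r, h in zip(weights, q, restored, map(to_float16, weights)):
    print(f"{w:+.4f} -> int8 {qi:4d} -> {r:+.4f} | fp16 {h:+.6f}")
```

When no value is clamped, each dequantized weight lands within half a step (scale / 2) of the original, while int8 storage is a quarter the size of float32 and float16 is half.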

Then there's PyTorch Mobile, which exports models for mobile and provides a runtime in C++ or Java. Lastly, ONNX Runtime is a more general deployment framework that targets multiple platforms. For TinyML on microcontrollers, Edge Impulse is a great end-to-end platform supporting data collection and small-model training.

Wrapping Up

2025 is set to bring richer ecosystems and more robust machine learning frameworks. Whether you are detecting anomalies or designing a convolutional neural network, there's an ML framework you can count on. We hope this guide helps you choose the right ML framework for your machine learning development.

At X-Byte Solutions, we stay framework-agnostic, which means we don't depend on a single ML development tool or framework. Instead, we evaluate each project's unique needs and decide on the best way forward. This helps us stay abreast of industry changes, design sustainable products, and deliver maximum value to our clients.

Partner with X-Byte for Expert ML Development Services Today!